Introduction

AI is Transforming Business – Are We Ready for the Compliance Challenges?

Artificial Intelligence (AI) is revolutionizing industries, driving automation, and reshaping customer experiences. But with its rapid growth comes a pressing need for responsible use. Ensuring AI systems are ethical, fair, and compliant with regulations is critical in 2024.

Why AI Compliance Matters More Than Ever in 2024

As AI becomes more powerful, businesses must ensure their systems don’t violate privacy, discriminate, or operate unfairly. Complying with legal frameworks not only avoids penalties but also builds trust with customers and stakeholders.

The Key Rules Governing AI

Laws like the EU AI Act and GDPR set strict standards for how AI systems are categorized, used, and monitored. These regulations help protect personal data and ensure that high-risk AI systems, like those in healthcare, operate safely. Global standards like ISO/IEC 42001 also guide businesses in managing AI responsibly.

In this article, we’ll explore how companies can navigate these regulations and ensure their AI systems meet both ethical and legal standards in 2024.

AI Compliance: The Legal Landscape

As artificial intelligence becomes more integrated into our lives, governments are creating laws and guidelines to ensure it is used responsibly. For example, the EU AI Act places AI systems into risk categories and closely regulates high-risk systems, such as those used in healthcare. Another key rule is the General Data Protection Regulation (GDPR), which governs how personal data is collected and processed. Global standards like ISO/IEC 42001 also give businesses a framework for managing AI carefully.

Comparison of Global AI Regulations

| Region/Country | Key Regulation | Focus | Privacy Focus | Enforcement Level |
|---|---|---|---|---|
| EU | EU AI Act | Risk-based categorization, high-risk regulation | GDPR (Data protection) | Strict |
| U.S. | Algorithmic Accountability Act (Proposed) | Algorithm transparency, fairness, bias checking | CCPA/CPRA (Data rights) | Proposed/State-specific |
| China | Provisions on the Administration of Deep Synthesis of Internet Information Services | Regulating AI-generated content, deepfakes | PIPL (Personal information protection) | High for AI media |

Country-Specific Laws

Different countries have their own specific laws to regulate AI. Here are a few examples:

- United States:

  - CCPA (California Consumer Privacy Act) and CPRA (California Privacy Rights Act): These laws give California residents rights over how their personal data is collected and used, including when it feeds AI systems. The CPRA also authorizes regulations on how businesses use automated decision-making technology.

  - Artificial Intelligence Video Interview Act (Illinois): If an employer uses AI to analyze video interviews, it must first notify the candidate, explain how the AI works, and obtain the candidate’s consent.

- China:

  - China has introduced laws like the Provisions on the Administration of Deep Synthesis of Internet Information Services, which govern AI-generated media, including deepfakes. This law is designed to prevent harmful content and protect users.

- United Kingdom:

  - The UK applies its own version of GDPR (the UK GDPR). The ICO Guidance on AI and Data Protection helps companies understand how to use AI while following the country’s privacy laws.

These laws aim to balance the innovation of AI with the protection of people’s rights, ensuring that AI is used fairly and ethically around the world.

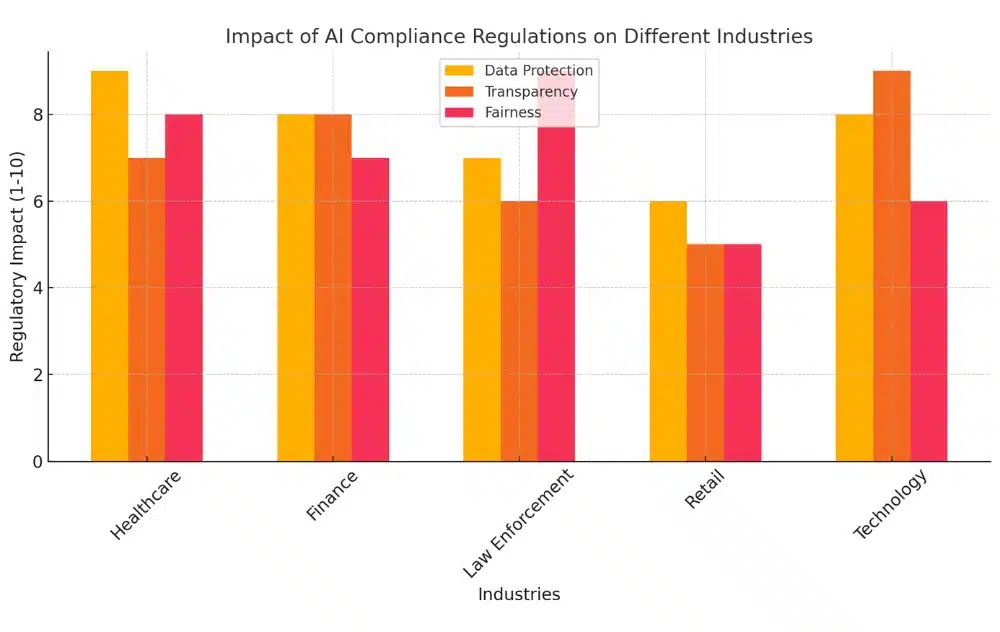

Key Components of AI Compliance

Data Protection and Privacy

When it comes to AI compliance, protecting personal data is one of the most critical issues. Laws like GDPR (General Data Protection Regulation) in Europe and CCPA (California Consumer Privacy Act) in the U.S. provide strict rules for how businesses can collect, store, and use personal information, especially in AI systems.

- How AI Compliance Aligns with Data Privacy Laws: Both GDPR and CCPA require that individuals’ personal data be handled with care. When AI systems are involved, companies must ensure that data is protected from unauthorized access and misuse. For example, GDPR gives individuals the right to know how their data is being used and, in certain circumstances, to request that it be deleted.

- Importance of Safeguarding Personal Data: AI systems often rely on large datasets, which may include sensitive information. If this data isn’t well-protected, it could lead to privacy breaches, where personal information is stolen or misused. For this reason, businesses must take extra steps to secure the data that AI systems use, such as encryption and anonymization.
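As a rough illustration of the safeguards mentioned above, the sketch below replaces direct identifiers with keyed hashes before a record reaches an AI pipeline. The field names, the `SECRET_KEY` value, and the `pseudonymize` helper are assumptions made up for this example, not part of any regulation or library.

```python
import hashlib
import hmac

# Hypothetical secret; in practice it would come from a secrets manager.
SECRET_KEY = b"replace-with-a-real-secret"

# Hypothetical field names treated as direct identifiers in this example.
DIRECT_IDENTIFIERS = {"name", "email"}


def pseudonymize(record: dict, key: bytes = SECRET_KEY) -> dict:
    """Replace direct identifiers with keyed SHA-256 digests; keep other fields."""
    cleaned = {}
    for field_name, value in record.items():
        if field_name in DIRECT_IDENTIFIERS:
            digest = hmac.new(key, str(value).encode("utf-8"), hashlib.sha256)
            cleaned[field_name] = digest.hexdigest()
        else:
            cleaned[field_name] = value
    return cleaned


record = {"name": "Ada Example", "email": "ada@example.com", "age_band": "30-39"}
safe_record = pseudonymize(record)
print(safe_record)
```

Note that this is pseudonymization rather than full anonymization: anyone holding the key can recompute the digests, so the output would generally still count as personal data under GDPR.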

Algorithmic Transparency

For AI systems to be trusted, they must be transparent and explainable. This means that people should be able to understand how AI makes decisions and what factors influence its outcomes.

- Why AI Systems Must Be Transparent: When AI systems are involved in important decisions (like approving loans or hiring employees), it’s essential to know how those decisions are made. If an AI system isn’t transparent, people may feel that decisions are unfair or biased.

- Building Trust and Accountability: By making AI systems transparent, companies can build trust with users. It shows that the AI isn’t a “black box” and that it operates fairly. Transparency also ensures accountability, as companies can explain and justify decisions made by AI when required.
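One simple way to make a decision explainable is to report how much each input contributed to the outcome. The sketch below does this for a toy linear scoring model; the weights, threshold, and feature names are invented for illustration and do not describe any real lending system.

```python
# Hypothetical weights for a toy linear credit-scoring model.
WEIGHTS = {"income_thousands": 0.5, "years_employed": 2.0, "missed_payments": -10.0}
BASE_SCORE = 20.0
APPROVAL_THRESHOLD = 50.0


def explain_decision(applicant: dict) -> dict:
    """Return the score, the decision, and each feature's contribution to the score."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = BASE_SCORE + sum(contributions.values())
    return {
        "score": score,
        "approved": score >= APPROVAL_THRESHOLD,
        "contributions": contributions,
    }


applicant = {"income_thousands": 60, "years_employed": 4, "missed_payments": 1}
report = explain_decision(applicant)

# List the factors from most to least influential.
for name, value in sorted(report["contributions"].items(), key=lambda kv: -abs(kv[1])):
    print(f"{name}: {value:+.1f}")
print("approved:", report["approved"])
```

Linear models are easy to explain this way; more complex models usually need dedicated explanation techniques, which this sketch does not cover.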

Bias and Fairness in AI

AI systems can sometimes make biased decisions if they’re trained on datasets that reflect existing societal biases. This can lead to unfair outcomes, such as discriminating against certain groups of people.

- Addressing the Risk of Bias: One of the biggest challenges in AI is preventing bias. Bias in AI can occur if the data used to train the system is skewed or unrepresentative. For instance, if an AI is trained on hiring data that favors certain groups, it may continue to favor those groups, leading to unfair hiring practices.

- Ethical Considerations for Eliminating Discrimination: To ensure fairness, companies need to carefully examine the data they use to train AI systems. They must remove any biased patterns and continuously monitor the AI’s decisions to make sure it doesn’t develop unfair behaviors over time. Ethical AI development also requires that systems are regularly audited for fairness and that human oversight is included in the decision-making process.
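A basic fairness check compares how often each group receives a favorable outcome. The sketch below computes per-group selection rates and the ratio between the lowest and highest rate. The sample data is made up, and the 0.8 cutoff is an assumption borrowed from the "four-fifths" heuristic often cited in U.S. hiring contexts, not a universal legal test.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, was_selected) pairs."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group
    return min(rates.values()) / highest


# Made-up hiring outcomes: group A has 5 of 10 selected, group B has 3 of 10.
decisions = (
    [("A", True)] * 5 + [("A", False)] * 5
    + [("B", True)] * 3 + [("B", False)] * 7
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # assumed review threshold
print(rates, round(ratio, 2), needs_review)
```

A low ratio does not prove discrimination on its own, but it is a reasonable trigger for the closer human review described above.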

These key components—data protection, transparency, and fairness—are essential for ensuring that AI systems are compliant, ethical, and trustworthy.

Ethical Governance of AI Systems

The Role of Ethics in AI Compliance

Ethics play a vital role in ensuring that AI systems operate fairly, transparently, and responsibly. When companies use AI for decision-making, it’s essential that these systems reflect core societal values like fairness, equality, and accountability. Without a focus on ethics, AI systems can inadvertently cause harm, such as reinforcing biases, violating privacy, or making decisions without accountability.

- Fairness: Ethical AI ensures that decisions made by AI are unbiased and do not discriminate against any group based on gender, race, or socioeconomic status.

- Accountability: Companies must take responsibility for the outcomes of their AI systems. If an AI system makes a mistake or causes harm, there should be clear processes in place to fix it and prevent it from happening again.

Frameworks for Governing AI Ethically

Several frameworks are being developed to guide companies in the ethical use of AI. These frameworks help businesses ensure that their AI systems are transparent, fair, and safe for use in society.

- AI Ethics Guidelines: Some organizations, such as the EU and the OECD, have developed guidelines that companies can follow to ensure ethical AI practices. These guidelines emphasize fairness, accountability, transparency, and human oversight.

- Bias Mitigation Tools: To avoid biased outcomes, businesses can use tools like fairness testing frameworks. These tools can evaluate AI algorithms to identify and eliminate biased patterns in decision-making.

How Companies Can Implement Ethical Guidelines

For businesses to ensure that their AI systems are ethical, they need to adopt several practical steps:

- Human Oversight: AI should never operate in isolation, especially when it comes to decisions that impact people. Having human oversight ensures that AI systems can be monitored, corrected, or adjusted when necessary. For instance, if AI is used in hiring, human reviewers should be able to step in to evaluate questionable decisions.

- Transparency: Companies must be transparent about how their AI systems work. This includes explaining the data used, the algorithms applied, and the reasons behind AI decisions. Being transparent helps users understand and trust the system.

- Continuous Monitoring: AI systems must be regularly audited to ensure that they remain fair and ethical over time. This helps catch any potential biases or unintended consequences that may arise as the AI continues to learn and adapt.

By embedding these practices into their AI governance strategies, companies can build AI systems that not only comply with legal standards but also align with the ethical expectations of society.

Generative AI and Compliance Challenges

Challenges Posed by Generative AI

Generative AI, a type of AI that can create new content such as text, images, or even simulations, poses unique challenges for compliance. While it offers exciting possibilities for innovation, it also raises significant risks:

- Creating Simulations: Generative AI can produce highly realistic simulations that may be used in sensitive industries like healthcare or law. However, these simulations could mislead users if they are not clearly identified as AI-generated, raising ethical and legal concerns about authenticity and transparency.

- Potential Biases: Like other AI models, generative AI can inadvertently produce biased content if it’s trained on biased data. For example, if a generative AI model is trained on biased historical data, it may generate outputs that reflect those same biases, resulting in discriminatory outcomes.

Compliance Workflow for Generative AI

To address these challenges, businesses can follow a structured compliance workflow. The table below outlines the key steps involved in ensuring compliance when using generative AI:

| Step | Description |
|---|---|
| Content Creation | AI generates new content, such as text or simulations. |
| Bias Testing | Analyze the content for potential biases, including gender, racial, and economic bias. |
| Content Labeling | Clearly label the AI-generated content to ensure transparency. |
| Human Oversight | Human experts review the AI’s outputs for accuracy and fairness. |
| Final Audit | A compliance audit ensures that all legal and ethical standards are met. |
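The workflow steps can be sketched as a small pipeline. Everything here is a placeholder: `generate` stands in for a real model call, `toy_bias_check` uses a tiny invented word list rather than a real bias test, and the reviewer's decision is passed in as a boolean.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical word list, used only to make the example runnable.
GENDERED_TERMS = {"chairman", "salesman", "stewardess"}


@dataclass
class ContentItem:
    text: str
    label: str = ""
    bias_flags: List[str] = field(default_factory=list)
    human_approved: bool = False


def generate(prompt: str) -> ContentItem:
    """Content creation: stand-in for a real generative model call."""
    return ContentItem(text=f"Draft response to: {prompt}")


def toy_bias_check(text: str) -> List[str]:
    """Bias testing: flag words that appear in the placeholder list."""
    words = set(text.lower().split())
    return sorted(GENDERED_TERMS & words)


def run_workflow(prompt: str, reviewer_approves: bool, bias_check=toy_bias_check):
    item = generate(prompt)                  # 1. content creation
    item.bias_flags = bias_check(item.text)  # 2. bias testing
    item.label = "AI-generated"              # 3. content labeling
    item.human_approved = reviewer_approves  # 4. human oversight
    # 5. final audit: labeled, reviewed, and free of bias flags
    passed = bool(item.label) and item.human_approved and not item.bias_flags
    return item, passed


item, passed = run_workflow("summarize the policy", reviewer_approves=True)
print(item.label, passed)
```

Passing the bias check in as a parameter keeps the pipeline independent of any particular testing tool, so a stronger check can replace the toy one later.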

Solutions for Ensuring Ethical Use of Generative AI

To address the risks associated with generative AI, companies need to implement strategies that promote ethical and transparent use:

- Labeling AI-Generated Content: One of the simplest solutions is to require clear labeling of AI-generated content. This helps users understand when they are interacting with a machine rather than a human, ensuring transparency.

- Bias Testing and Audits: Just like other AI systems, generative AI should undergo regular audits to check for bias in its outputs. Businesses should also continuously refine the datasets they use to train generative models, ensuring they are balanced and representative.

- Human Oversight: In industries where generative AI is used to create important simulations or recommendations (such as in healthcare), human experts should review the AI’s outputs to ensure accuracy and appropriateness.

AI in Compliance: Practical Applications

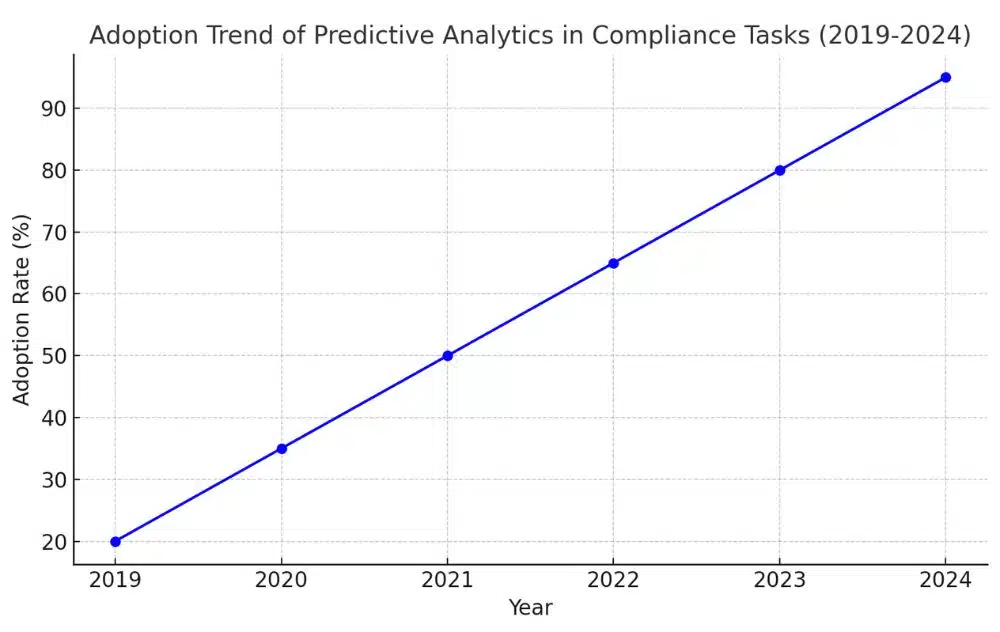

Predictive Analytics in Compliance

AI is becoming an indispensable tool for improving compliance efforts, particularly through the use of predictive analytics. Predictive models help companies forecast risks and identify potential compliance issues before they become problematic.

- How Predictive Models Help Forecast Compliance Risks: AI can analyze historical data and detect patterns that indicate potential compliance risks. For example, it can spot trends in financial transactions that may signal money laundering or flag anomalies in data that suggest non-compliance with industry standards.

- Practical Examples of AI Automating Compliance Tasks: Many companies are now using AI to automate routine compliance tasks. For instance, AI can automatically monitor transactions to ensure they comply with anti-money laundering (AML) regulations or scan employee communications for potential breaches of internal policies. AI-driven systems can also generate compliance reports automatically, saving businesses time and reducing the chance of human error.
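To make the transaction-monitoring idea concrete, the sketch below scores new transactions against a customer's history and flags those that sit far from the usual range. It uses a plain z-score rather than a trained predictive model, and the amounts and the threshold of 3 are illustrative assumptions.

```python
from statistics import mean, stdev


def flag_unusual(history, new_amounts, threshold=3.0):
    """Return new amounts lying more than `threshold` standard deviations from the historical mean."""
    mu = mean(history)
    sigma = stdev(history)  # needs at least two historical values
    if sigma == 0:
        # No variation in the history: anything different is unusual.
        return [amount for amount in new_amounts if amount != mu]
    return [amount for amount in new_amounts if abs(amount - mu) / sigma > threshold]


# Made-up transaction amounts for one customer.
history = [100, 120, 95, 110, 105, 115, 98, 102]
new_amounts = [108, 950]

flagged = flag_unusual(history, new_amounts)
print(flagged)
```

Scoring new transactions against a separate history matters: if the outlier were included in the sample used to compute the mean and standard deviation, it would inflate both and could hide itself.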

Monitoring and Auditing AI Systems

To ensure AI systems remain compliant with regulations and ethical standards, businesses need to continuously monitor and audit their AI models.

Best Practices for Monitoring and Auditing AI Systems

| Best Practice | Description |
|---|---|
| Regular Audits | Conduct regular audits to ensure AI compliance with regulations and ethical standards. |
| Bias Detection Tools | Use bias detection tools to identify and correct biased patterns in AI outputs. |
| Monitoring System Performance | Continuously monitor AI system performance to ensure consistency and accuracy. |
| Transparency Reporting | Provide transparency reports on how AI systems make decisions and handle data. |
| Periodic Updates to AI Models | Update AI models periodically to address evolving risks and improve compliance. |

- Tools and Methods for Monitoring Compliance: There are several tools available to help companies monitor their AI systems. For instance, AI fairness and bias detection tools can analyze an AI system’s outputs to identify any patterns of bias. Other tools can track the performance of AI systems over time, ensuring they operate as intended and don’t deviate from compliance guidelines.

- The Importance of Regular Audits and Updates: AI systems are dynamic and can change as they learn from new data. This makes regular audits essential for ensuring that AI models continue to comply with evolving regulations and standards. Businesses should also update their AI systems regularly to address any new compliance risks or ethical concerns that may arise.
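A lightweight form of ongoing monitoring is to compare a model's recent behavior with a reference period. The sketch below checks whether the approval rate has moved by more than a tolerance; the 10-point tolerance and the sample decisions are assumptions chosen for illustration.

```python
def approval_rate(decisions):
    """Share of True values in a non-empty list of boolean decisions."""
    return sum(decisions) / len(decisions)


def check_drift(baseline, recent, tolerance=0.10):
    """Compare recent approval rate with the baseline and report whether it drifted."""
    baseline_rate = approval_rate(baseline)
    recent_rate = approval_rate(recent)
    return {
        "baseline_rate": baseline_rate,
        "recent_rate": recent_rate,
        "drifted": abs(recent_rate - baseline_rate) > tolerance,
    }


# Made-up decisions: 60% approvals at launch, 40% in the latest period.
baseline = [True] * 60 + [False] * 40
recent = [True] * 40 + [False] * 60

result = check_drift(baseline, recent)
print(result)
```

A drift flag is a prompt for an audit, not a verdict: the shift may reflect a change in applicants rather than a problem with the model.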

By using predictive analytics and continuously monitoring AI systems, businesses can not only improve their compliance efforts but also create more trustworthy and reliable AI models.

Best Practices for Implementing AI Compliance

Pre-Implementation Risk Assessment

Before deploying any AI system, it’s crucial to evaluate potential risks to ensure it meets both legal and ethical standards.

- Importance of Evaluating Risks: AI systems can be complex, and without careful planning, they may introduce risks such as privacy violations, bias, or unintended consequences. A pre-implementation risk assessment helps identify these issues early on, allowing businesses to adjust their models and processes before they cause harm. It’s important to assess how AI will handle sensitive data and whether it could produce biased or discriminatory outcomes. High-risk applications, like those in healthcare or finance, need even more rigorous checks.

Collaboration Between Compliance and IT Teams

For AI compliance to be effective, there needs to be strong collaboration between compliance officers and IT professionals.

- Why Collaboration is Key: Integrating AI into compliance strategies requires technical expertise to understand how AI models work and legal knowledge to ensure these models meet regulatory requirements. The compliance team can provide insights into legal risks and industry standards, while the IT team can explain the technology behind AI systems and ensure proper implementation. Working together, they can develop AI solutions that are both effective and compliant, avoiding issues like data breaches or biased outcomes.

Training and Documentation

Ensuring that teams understand how AI works and keeping detailed records of AI activities is essential for ongoing compliance.

- Training Compliance Teams: Compliance officers need to be well-versed in the AI tools their company uses. This means providing regular training so that they can understand how AI makes decisions and how to monitor it for compliance. Employees must also know how to flag potential issues or biases in the AI’s decision-making processes.

- Maintaining Robust Documentation: AI systems are often evolving, and it’s important to document how they are trained, how they function, and how they are updated. Having clear documentation helps companies show regulators that they are following best practices and adhering to compliance standards. It also makes it easier to audit the AI system if any issues arise, ensuring transparency throughout its lifecycle.
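Documentation is easier to keep current when it lives in a structured record next to the model. The sketch below shows one possible shape for such a record; the `ModelRecord` fields and the sample values are hypothetical and should be adapted to whatever a regulator or internal policy actually asks for.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class ModelRecord:
    """Hypothetical documentation record for one deployed model version."""
    name: str
    version: str
    intended_use: str
    training_data: str
    last_audit: str  # ISO date string
    known_limitations: List[str] = field(default_factory=list)


record = ModelRecord(
    name="resume-screener",
    version="1.2.0",
    intended_use="Rank applications for human review; not for automatic rejection.",
    training_data="Internal applications, 2019-2023, identifiers removed.",
    last_audit="2024-03-01",
    known_limitations=["Not evaluated on non-English resumes."],
)

# Serialize to JSON so the record can be stored and versioned with the model.
record_json = json.dumps(asdict(record), indent=2)
print(record_json)
```

Storing the record as JSON alongside each model version gives auditors a single place to see what changed and when.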

By following these best practices—assessing risks, fostering collaboration between teams, and keeping up with training and documentation—companies can effectively manage the complexities of AI compliance and ensure their systems are both legally and ethically sound.

Future of AI Compliance

Emerging Trends: AI and Blockchain for Enhanced Transparency

One exciting trend in AI compliance is the integration of AI with blockchain technology. Blockchain is known for its transparency and immutability, making it a valuable tool for tracking how AI systems make decisions and handle data. By storing AI decision-making processes on a blockchain, companies can create an auditable trail that regulators and stakeholders can trust. This ensures that AI systems operate transparently, and any changes made to the system can be easily tracked and reviewed.

For example, companies could use blockchain to record the data used to train AI models and document how those models evolve over time. This provides clear visibility into how AI systems operate, reducing concerns about “black box” algorithms. The combination of AI and blockchain could be particularly useful in industries like finance, where regulatory requirements demand clear records of every decision made by AI systems.
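The core property that makes such a trail auditable is that each record commits to the one before it. The sketch below shows that idea with a simple hash-chained log. It is not a blockchain (there is no distribution or consensus), and the event fields are invented for the example.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64


def entry_hash(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the entry body."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_entry(log: list, event: dict) -> None:
    """Append an event that records the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    body = {"index": len(log), "event": event, "prev_hash": prev_hash}
    log.append({**body, "hash": entry_hash(body)})


def verify(log: list) -> bool:
    """Recompute every hash and check that each entry links to the previous one."""
    prev_hash = GENESIS_HASH
    for index, entry in enumerate(log):
        body = {"index": entry["index"], "event": entry["event"], "prev_hash": entry["prev_hash"]}
        if entry["index"] != index or entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != entry_hash(body):
            return False
        prev_hash = entry["hash"]
    return True


log = []
append_entry(log, {"model": "credit-v1", "decision": "declined", "case": "A-001"})
append_entry(log, {"model": "credit-v1", "decision": "approved", "case": "A-002"})

valid_before = verify(log)
log[0]["event"]["decision"] = "approved"  # simulate tampering with an old record
valid_after = verify(log)
print(valid_before, valid_after)
```

Because every hash depends on the previous one, editing an old record invalidates the chain from that point on, which is what lets a reviewer detect after-the-fact changes.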

The Evolution of AI Regulations

As AI continues to grow and become more integrated into daily life, we can expect more stringent regulations to emerge. Governments around the world are already discussing how to regulate AI in a way that balances innovation with public safety and fairness.

- Stricter Guidelines on High-Risk AI: We can expect to see more regulations focusing on high-risk AI systems, such as those used in healthcare, law enforcement, and financial services. These systems have the potential to significantly impact people’s lives, so governments will likely impose more rigorous testing and transparency requirements.

- Global Standardization: Another trend will likely be the push for global AI standards. As AI is used across borders, there will be a need for unified regulations that ensure AI systems are safe and fair no matter where they’re used. Organizations like the ISO (International Organization for Standardization) are already working on developing such frameworks.

- AI Ethics and Human Rights: With growing concerns about the ethical use of AI, future regulations will likely emphasize human rights and ethical governance. This could involve rules ensuring that AI systems respect privacy, avoid discrimination, and include human oversight in critical decision-making processes.

In the coming years, businesses can expect that AI regulations will become stricter and more detailed, requiring more transparency, accountability, and responsibility in how AI systems are designed, deployed, and maintained. Staying ahead of these trends will be crucial for companies to ensure compliance and maintain trust with their customers and stakeholders.

Conclusion

As AI continues to revolutionize industries, the balance between innovation and compliance has never been more important. AI offers incredible potential to drive efficiency and solve complex problems, but this potential must be harnessed responsibly. Businesses need to ensure that their AI systems operate within the bounds of legal regulations and ethical standards, safeguarding privacy, ensuring fairness, and maintaining transparency in decision-making.

The key to success in 2024 and beyond lies in adopting AI technologies while staying committed to compliance. This means performing regular audits, incorporating human oversight, and adhering to global and country-specific regulations. Companies that embrace these principles will not only avoid regulatory risks but also build trust with their customers and stakeholders.

It’s time for businesses to take action—adopt AI responsibly by embedding compliance into the heart of your AI strategies, ensuring that innovation and ethics go hand in hand.

References and Further Reading

- EU AI Act Overview: European Commission. The Artificial Intelligence Act.

- General Data Protection Regulation (GDPR): European Union. General Data Protection Regulation (GDPR).

- ISO/IEC 42001 and AI Governance: International Organization for Standardization. ISO/IEC 42001 for AI Risk Management.

- Algorithmic Accountability Act: U.S. Congress. Algorithmic Accountability Act of 2022 (Proposed).

- AI and Ethical Guidelines: OECD. OECD Principles on Artificial Intelligence.

- California Consumer Privacy Act (CCPA) and Privacy Rights Act (CPRA): California Attorney General’s Office. CCPA & CPRA Overview.

- AI in Compliance and Risk Management: Gradient Ascent. Streamlining Regulatory Compliance with AI.

- Navigating the Landscape of AI Regulations: ACL Digital. Understanding Key AI Regulatory Compliance.

These sources will provide a deeper understanding of the legal, ethical, and compliance challenges surrounding AI technologies and how businesses can navigate them effectively.